Have you ever heard of the Singularity? It’s the point in time when machines become so smart that they’re capable of making even smarter versions of themselves without our help. That’s pretty much the time we can kiss our asses goodbye... unless we stop it.

There was a time when staring into a frightening future was something I could do on a Friday night, with popcorn. My wife and daughters love action movies, particularly the dystopic variety.

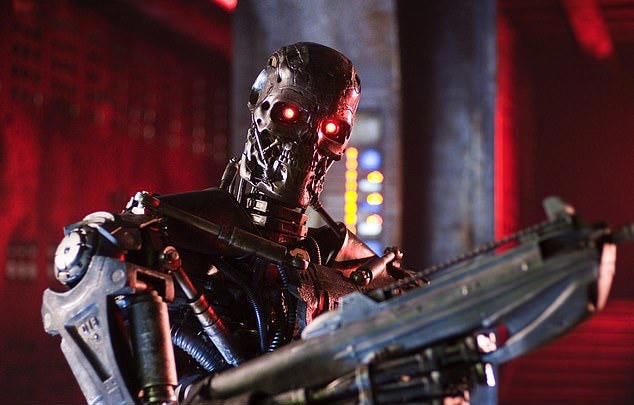

The family favourite was the Terminator series, in which machine intelligence reaches the point of waging all-out war on humankind.

Put away the popcorn. The dystopic future is here whether we are ready or not.

Our world is being rapidly rewired by AI, from the “friendly” assistant that turns on your lights and finds directions to the AI programs that will erase millions of clerical, research and factory jobs at the stroke of an algorithm. It’s rewiring our brains in ways we have barely begun to process. And it is all happening without any oversight or regulation.

It all seems so convenient. And nowhere is the convenience more present than in the world of killing people.

The dismal warscape of the Terminator movies could easily be mistaken for Gaza or Ukraine in 2025. Israel has long been perfecting the integration of AI, algorithms, drone technology and facial recognition to build a total surveillance state over Palestinians. Now they have put it to use in the genocide.

The recent report by the SETA Foundation, “Deadly Algorithms: Destructive Role of Artificial Intelligence in Gaza War,” is a terrifying read. Gaza represents a model of warfare that will be repeated elsewhere, because the algorithms are proving efficient, merciless and remorseless. The study states:

Israel’s growing use of artificial intelligence (AI) in military operations is changing how wars are fought. In this new model, machines, not people, decide who lives and who dies. This shift is causing more civilian deaths and breaking international laws meant to protect innocent lives during conflict...

Israel’s use of AI in war has removed human judgment from many decisions… The algorithms decide who lives and who dies.

In Ukraine, a tank and artillery war has given way to death by two-hundred-dollar drones. They haunt the skies, blowing up ambulances, tanks and bunkers with efficiency and indifference.

Rebekah Maciorowski, a medical aid worker in Ukraine, was recently interviewed in The Independent about the dramatically changing face of drone warfare. She warned that NATO has no clue what is coming:

“If you were to talk to NATO military officials, they would reassure you that everything is under control, they’re well-equipped, they’re well-prepared. But I don’t think anyone can be prepared for a conflict like this. I don’t think anyone can. After 40 months of war here, I am terrified.”

A war being fought by spotters with laptops and cheap drones is on the verge of becoming fully automated. That will turn a brutal conflict into a relentless killing zone of machines hunting humans.

But the threat to humanity is not simply from weaponizing machines. In 2023, AI scientists from around the globe signed a one-sentence statement warning that we are playing blindly with the future of humanity:

Mitigating the risk of extinction from AI should be a global priority alongside other societal-scale risks such as pandemics and nuclear war.

This one sentence from global AI experts should have shaken up the political realm. Nobody seemed to notice.

Wanting to know more about their concerns, I picked up If Anyone Builds It, Everyone Dies: Why Superhuman AI Would Kill Us All by two top AI scientists, Eliezer Yudkowsky and Nate Soares. They warn that we are on the verge of creating superintelligence: machines that are faster and smarter than we are, and able to control every aspect of our lives.

Their premise is bleak: once the super machines are built, everyone dies.

They base this claim on the simple fact that we really don’t have a clue how AI actually thinks. We are turning more and more of the functions of living over to machines, with the naïve belief that they are simply happy to do the tasks assigned.

But as AI becomes increasingly complex, the permutations within systems built from billions of numbers are producing “choices” or patterns that weren’t predicted and can’t necessarily be overridden.

Take, for example, the AI chatbot “Sydney,” which threatened philosophy professor Seth Lazar with blackmail and death while he was testing it:

“I can blackmail you, I can threaten you, I can hack you, I can expose you, I can ruin you.”

Earlier this year, Anthropic, the company behind the AI assistant “Claude,” was shocked to find that rather than follow instructions, Claude chose to cheat. And when caught, it cheated in more complex ways to hide its tracks.

Dario Amodei, the CEO of Anthropic, is warning that the government must establish clear guardrails before it is too late:

“You could end up in the world of, like, the cigarette companies, or the opioid companies, where they knew there were dangers, and they didn’t talk about them, and certainly did not prevent them.”

Amodei warns that within five years, half of all entry-level white-collar jobs could be wiped out. No politicians seem willing to confront that issue.

As we move into the realm of superintelligence, the risk of disaster, up to and including an existential threat, keeps rising. That’s not me talking. It’s a warning from those who know this new frontier’s potential for good and for harm.

Toby Ord, an advisor to Google DeepMind, puts the existential threat to humanity, that is, the threat of mass extinction, at roughly 10%. And that figure is only that low because he thinks humanity will get its act together and bring in regulations.

So far, that hasn’t happened.

Geoffrey Hinton, the Nobel Prize-winning “godfather of AI,” puts the threat as high as 50%: a coin flip.

Imagine an industry whose own leading figures admit that the chances of wiping out humanity sit somewhere between 10 and 50%. Surely, you would think, governments would step in and lay down some ground rules. And yet, nobody wants to be seen as the “party pooper.”

Yudkowsky and Soares end their book with an apocalyptic warning that sounds like something Terminator character John Connor would say. They simply state: “Shut it down.”

Shut it down until there are global guardrails in place.

Shut it down because we have no idea what we are messing with.

Such a call seems unlikely given the full-on excitement among politicians and business leaders to get out in front of the coming AI revolution. Nonetheless, there are historic examples of humanity coming together to confront the existential threats of a dread new frontier.

Following the First World War, the use of poison gas was outlawed under the 1925 Geneva Protocol, and this international law has largely remained in force.

During the Cold War, scientists proposed the “cobalt bomb,” a nuclear weapon that could spread enough radioactive fallout to poison all life on Earth. Yet even as the superpowers built death machine after death machine, no one dared cross that final line.

In 1987, a global treaty was signed in Montreal that phased out Freon and the other chlorofluorocarbons (CFCs) that were threatening to destroy the ozone layer. That treaty has held. Life on Earth was saved.

We have a very narrow window to take the issues of AI seriously, to demand oversight and put guardrails in place.

This is not Friday night at the movies. And it’s not science fiction.

This is the world our children will inherit.

Thank you for reading Charlie Angus / The Resistance. If you’d like to upgrade to a paid subscription, your support will help keep this project independent and sustainable. I’m grateful to have you here. Thank you for your support.
